Most founders do not fail because they cannot build.

They fail because they build first, then look for validation after months of work, when changing direction is expensive and emotionally hard. A few early compliments turn into confidence, the roadmap grows, and the team ships something that looks real but does not get used.

If you are trying to figure out how to validate an MVP, the goal is not to prove your idea is brilliant. The goal is to prove, before you write code, that real people have the problem, feel it often, and will change their behavior to solve it. That is the difference between demand and polite interest.

Good market validation is practical. You confirm the user and the pain, identify the workaround they already use, and test whether they will take a real action, such as sign up, book a call, pay, or repeat a task.

Once you have that signal, you can build with confidence. Without it, you are mostly guessing.

To validate an MVP, run the smallest test that proves behavior, not opinions. Start by confirming the pain and the current workaround with 10 to 15 target users. Then run one action-based test: a waitlist signup, a demo request, a paid pilot, or a concierge version. If people take the action and come back, you have market validation. If they only say it is interesting, you still have a hypothesis.

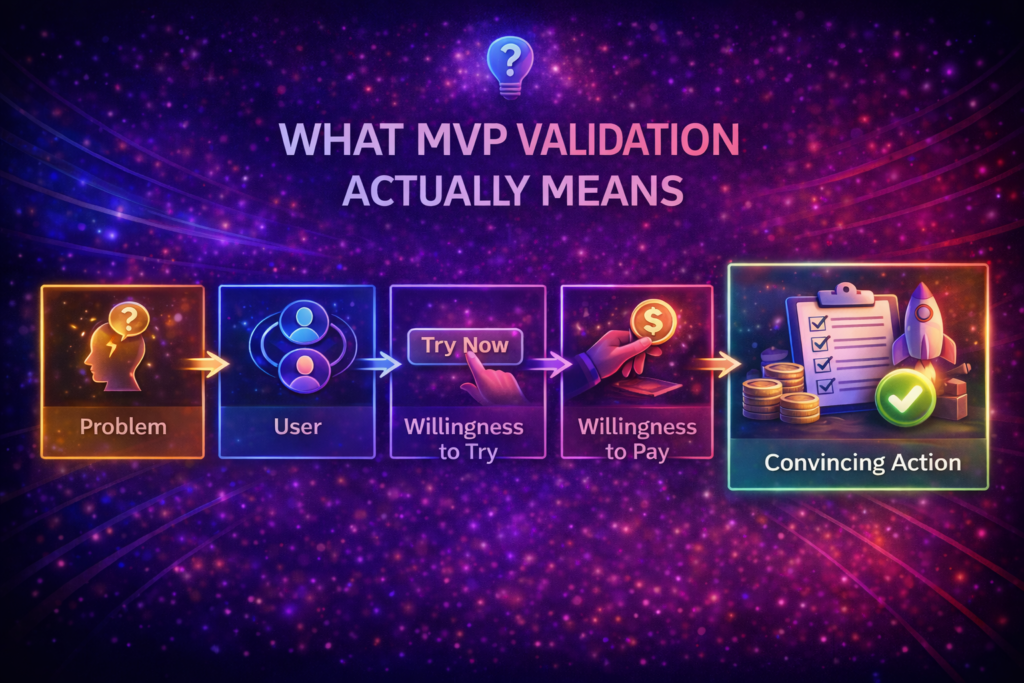

What MVP Validation Actually Means

Validation is not collecting praise. It is reducing uncertainty with evidence that changes what you do next. The evidence you want is simple. A real user with a real problem takes a real action, without you pushing them, and that action repeats.

If you are serious about how to validate an MVP, define the exact risk you are trying to remove before you run tests. Are you validating the problem, the user, the willingness to try, or the willingness to pay? Mixing these creates confusion because the signals look good while the decision stays unclear.

One practical way to validate a startup idea is to pick a single behavior that would convince you the idea is worth building, then run the smallest test that can produce that behavior. That might be signups from the right audience, repeat usage in a pilot, or a paid commitment. Clear signals make decisions easier. Vague feedback does not.

This is also where many teams get trapped by labels. If you are not sure what should count as an MVP versus an early prototype or concept, it helps to anchor your thinking around what actually counts as an MVP so you are validating the right thing, not just something that looks like progress.

Why Market Validation Saves You Months and Budget

Building is the most expensive way to learn. Once code exists, every change has a cost attached, such as redesign, rework, retesting, and weeks of delay. Market validation flips that around. It helps you confirm the problem, the user, and the willingness to act before you commit to a build that is hard to unwind.

It matters because most businesses do not get unlimited attempts. The U.S. Bureau of Labor Statistics reported that only 34.7% of private-sector business establishments born in March 2013 were still operating in March 2023. That is a reminder that time and budget are finite, and the fastest way to waste both is building the wrong thing first.

When you validate early, you spend your effort on the highest leverage questions.

- Is the pain real today?

- What workaround proves it?

- Will people take real action to solve it?

Those answers make your scope tighter, your roadmap calmer, and your build decisions much easier to defend.

Start with the Fastest Validation Tests

The fastest way to validate a startup idea is to choose tests that force real action. Opinions are cheap. Actions cost time, attention, or money, which is why they produce cleaner signals. Start with the lowest effort test that can still prove behavior, then level up only if the signal is unclear.

Most early validation fails because the test is too light to mean anything. A friend saying it sounds cool is not a signal. Even a user saying they would use it is not a signal.

The test has to create a small cost for the user (time, effort, or money) so you can see whether the problem is strong enough to drive action. That is the point of starting with fast tests: they help you earn evidence before you take on complexity.

1) Smoke Test Landing Page

A simple page can validate demand without building the product. Focus on one promise, one audience, and one action. Count conversions from the right traffic source, not random visits. A strong signal looks like consistent signups or demo requests without heavy follow-up.

- A clear outcome that users want

- One action button that matters

- A short form that feels easy
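To make "count conversions from the right traffic source" concrete, here is a minimal sketch that computes the conversion rate per source and ignores traffic from outside your target audience. The `Visit` record, the source labels, and the sample data are hypothetical; in practice these numbers would come from whatever analytics or form tool you already use.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Visit:
    source: str      # where the visitor came from (hypothetical labels)
    converted: bool  # True if they took the one action, e.g. a signup


def conversion_by_source(visits, target_sources):
    """Return {source: (conversions, visits, rate)} for target sources only."""
    counts = defaultdict(lambda: [0, 0])  # source -> [conversions, visits]
    for v in visits:
        if v.source not in target_sources:
            continue  # random traffic says little about demand from your user
        counts[v.source][1] += 1
        if v.converted:
            counts[v.source][0] += 1
    return {src: (conv, total, conv / total) for src, (conv, total) in counts.items()}


visits = [
    Visit("niche_community", True),
    Visit("niche_community", False),
    Visit("niche_community", True),
    Visit("direct_outreach", False),
    Visit("random_social", True),  # excluded: not a target source
]
print(conversion_by_source(visits, {"niche_community", "direct_outreach"}))
```

Filtering before counting is the design choice that matters here: a signup from outside your audience inflates the rate without telling you anything about the user you plan to build for.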

2) Problem Interviews that Do Not Bias the Answer

Most interviews fail because the questions lead the witness. Keep them grounded in real past behavior. Ask for stories, not opinions. The goal is to confirm that the pain is frequent, costly, and already being solved in some messy way.

- Tell me about the last time this happened

- Show me how you handle it

- What did it cost you in time or money?

3) Concierge and Wizard of Oz Tests

This is how to validate an MVP without automation. Deliver the outcome manually behind the scenes while the user experience looks real enough to test behavior. If people return, pay, or refer others, you have pull. If they do not, you saved months of building.

- Manual delivery of the result

- A simple front-end to trigger the workflow

- Tracking repeat usage and willingness to pay
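Tracking repeat usage in a concierge pilot can be as simple as checking who came back after their first request. The sketch below assumes a hypothetical log that maps each pilot user to the dates they triggered the manual workflow. A return only counts if it happens on a later day, so several requests on day one do not look like repeat usage.

```python
from __future__ import annotations

from datetime import date


def repeat_usage_rate(sessions: dict[str, list[date]], min_gap_days: int = 1) -> float:
    """Share of pilot users who came back at least once after their first use.

    Users with no recorded sessions still count in the denominator.
    """
    if not sessions:
        return 0.0
    returned = 0
    for dates in sessions.values():
        if not dates:
            continue
        first = min(dates)
        # A later use at least `min_gap_days` after the first one counts as a return.
        if any((d - first).days >= min_gap_days for d in dates):
            returned += 1
    return returned / len(sessions)


sessions = {
    "u1": [date(2024, 5, 1), date(2024, 5, 8)],  # came back a week later
    "u2": [date(2024, 5, 2)],                    # used it once
    "u3": [date(2024, 5, 3), date(2024, 5, 3)],  # same day only, not a return
    "u4": [date(2024, 5, 4), date(2024, 5, 6)],  # came back two days later
}
print(repeat_usage_rate(sessions))
```

This only measures the "return" part of the signal. Willingness to pay still needs its own action, such as a deposit or a paid pilot.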

Prototype, Proof of Concept, or MVP

Validation gets easier when you pick the right type of build for the risk you are trying to remove. A lot of teams waste time because they build an MVP when they only need clarity, or they build a prototype when the real risk is technical feasibility. The goal is not to build more. The goal is to learn the right thing with the smallest artifact.

This is where choosing the right early build keeps you from mixing stages and measuring the wrong signal.

1) Use a Prototype When the Risk is Clarity

Build a prototype when you need to see whether users understand the flow and the value. It should be quick, easy to change, and focused on the main journey. Success looks like users moving through it without explanation and describing the value back in their own words.

2) Use a Proof of Concept When the Risk is Feasibility

Build a proof of concept when the risky part is technical. The goal is to remove engineering uncertainty by testing the most uncertain component under realistic constraints.

- Can the integration work with real access and real data?

- Can the model perform well enough for the use case?

- Can the system handle load, latency, or other key constraints?

Success looks like proof that the risky component works reliably, plus a clear picture of its limits, so you can estimate what it will take to ship it in production.

3) Use an MVP When the Risk is Adoption

Build an MVP when you need proof of usage. It should let real users complete one job end-to-end, then produce measurable behavior. Success looks like users completing the core action, returning without reminders, and showing willingness to pay, commit, or expand usage.

A Simple MVP Validation Checklist You Can Run in a Week

If you are serious about how to validate an MVP, this one-week checklist keeps the work focused and measurable. The goal is not to collect more feedback. The goal is to earn one clear signal that tells you whether the idea is worth building.

- You can name one user and one job in one sentence

- The pain shows up often, and it costs time, money, or missed opportunities

- You can point to a real workaround they use

- You can reach at least 10 target users again within 7 days

- You have one measurable success signal, not a list of metrics

- You have one action-based test ready: a waitlist signup, demo request, paid pilot, or concierge flow

- You have defined a stop or pivot trigger before running the test
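The last two checklist items can be written down as a rule before the test starts. This sketch assumes your one signal is a rate (for example, the share of reached target users who booked a demo) and that you chose a go threshold, a stop threshold, and a minimum sample in advance. The `ValidationRule` class, the `decide` function, and the numbers are illustrative assumptions, not recommended benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationRule:
    """Written down BEFORE the test runs. All thresholds here are hypothetical."""
    signal: str        # the one measurable success signal
    go_rate: float     # at or above this rate: build
    stop_rate: float   # below this rate: stop or pivot
    min_sample: int    # fewer target users than this means no decision yet

    def __post_init__(self):
        if not 0.0 <= self.stop_rate <= self.go_rate <= 1.0:
            raise ValueError("expected 0 <= stop_rate <= go_rate <= 1")


def decide(rule: ValidationRule, actions: int, reached: int) -> str:
    """Map observed behavior to one decision: 'go', 'stop', or 'rerun'."""
    if not 0 <= actions <= reached:
        raise ValueError("actions must be between 0 and reached")
    if reached < rule.min_sample:
        return "rerun"  # not enough target users reached to decide
    rate = actions / reached
    if rate >= rule.go_rate:
        return "go"
    if rate < rule.stop_rate:
        return "stop"
    return "rerun"  # unclear zone: narrow the question and run a sharper test


rule = ValidationRule(signal="demo booked", go_rate=0.30, stop_rate=0.10, min_sample=10)
print(decide(rule, actions=4, reached=12))
```

The point of the "rerun" branch is that a middling result is not a yes. It tells you to tighten the audience or the promise, not to start building.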

If you can tick these off, you are not guessing. You are running a validation process that produces a clear go or no-go signal before you spend weeks building.

How Validation Connects to Time and Cost

Validation is not a separate phase. It is what keeps your build plan stable. When you validate early, you lock the user, the job, and the signal you are chasing. That reduces scope changes mid-build, which is where timelines and budgets usually get wrecked.

It also makes planning easier because you are not estimating a vague idea. You are estimating a specific workflow with clear boundaries. That is the difference between guessing and setting a realistic build timeline before you commit.

Cost works the same way. The more uncertainty you carry into development, the more you pay in rework later. Validation helps you narrow what version one must include, what can wait, and what does not matter. That is how you start budgeting without guessing, instead of pricing a full product by accident.

Common Validation Mistakes Founders Make

When validation is done well, it reduces risk and makes decisions easier. When it is done poorly, it creates false confidence. You start building with certainty that is not backed by behavior. That is when teams ship, hear silence, and then scramble to patch the product with more features.

Most mistakes here are not technical. They are measurement mistakes. The test looks active, with interviews, clicks, calls, and feedback, but it does not answer the real question. If you care about how to validate an MVP, every test needs one clear signal and one clear decision tied to it. Otherwise, you are collecting noise that feels like progress.

These are the traps that waste the most time:

- Asking leading questions and getting polite yes answers

- Testing with the wrong audience because they are easier to reach

- Counting interest instead of behavior, especially clicks and compliments

- Running tests without a clear success signal or stop trigger

- Building too much before any action-based proof exists

- Ignoring churn signals in pilots and calling it product-market fit

- Adding features to fix uncertainty instead of narrowing the question

Conclusion

If you want to validate a startup idea, focus on behavior over opinions. Confirm the pain, confirm the workaround, then run the smallest test that forces a real action. When the signal is clear, building becomes a focused delivery job instead of a guessing game.

Here is the simplest way to remember how to validate an MVP. One user. One job. One measurable signal. Validate that first, then build the smallest version that can deliver it end-to-end. When you work this way, timelines calm down, budgets stay predictable, and you avoid building a full product before you have proof anyone actually wants it.

FAQs

Q1. How many interviews do I need to validate a startup idea?

A1. For most early decisions, 10 to 15 interviews with the right target users is enough to spot a clear pattern and a real workaround.

Q2. What counts as proof when you validate a startup idea?

A2. A real action from the right user: signing up, booking a call, committing to a pilot, paying, or repeating the behavior without reminders.

Q3. Can I do market validation without ads?

A3. Yes. Use direct outreach, communities, partnerships, existing networks, or a small waitlist page shared to targeted users. The signal matters more than the channel.

Q4. When should I stop validating and start building?

A4. Start building when you can name the user and job clearly, users confirm the pain and workaround, and your test produces a repeatable action signal.

Q5. How do I validate pricing early?

A5. Ask for a paid pilot, a deposit, or a pre-order. If people avoid any money commitment, pricing is not validated yet.

Q6. What if people say it is interesting, but do nothing?

A6. Treat that as a no. Adjust the audience, the problem, or the promise, then rerun a smaller test focused on one action.